“It was running fine…”

In our performance consulting work, we often hear variations of the following: “Our web application was running fine with a few hundred users. Now when we run a promotion with our new partner and get a thousand users coming in at one time, it grinds to a halt.”

We’ve heard this from startup founders, product managers, development team leads, CTOs, and others who see their product gaining traction, but simultaneously see performance falling off a cliff. Often this situation is characterized as a “good problem to have” until you’re the technical person who needs to solve the problem, and quickly. User experience is suffering at the worst possible time: just as the product is taking off.

We don’t know just how often this occurs, but judging from the calls we get, there are lots of anecdotal examples. So, why does this happen? There are a number of technical reasons an application can suffer performance issues. Too often, though, it’s the result of performance needs not being properly assessed at the start of work. The “good problem to have” mentality leads to a collective blind eye toward performance along the way.

Our goal in this article is to paint a realistic picture of how to think about performance early in the life of an application.

What do we mean by performance?

Web application performance is a broad discipline. Here, when we speak of performance, we are specifically referring to the speed of the application in providing responses to requests. This article is focused on the server side, but we encourage you to also look at your application holistically.

Speaking of the server, high performance encompasses the following attributes:

- Low latency. A high-performance application will minimize latency and provide responses to user actions very quickly. Generally speaking, the quicker the better: ~0ms is superb; 100ms is good; 500ms is okay; a few seconds is bad; and several seconds may be approaching awful.

- High throughput. A high-performance application will be able to service a large number of requests simultaneously, staying ahead of the incoming demand so that any short-term queuing of requests remains short-term. Commonly, high throughput and low latency go hand in hand.

- Performing well across a spectrum of use-cases. A high-performance application functions well with a wide variety of request types (some cheap, some expensive). A well-performing application will not slow, block, or otherwise frustrate the fulfillment of requests based on other users’ concurrent activities. Conversely, a low-performance application might have an architectural bottleneck through which many request types flow (e.g., a single-threaded search engine).

- Generally respecting the value of users’ time. Overall, performance is in the service of user expectations. A user knows low performance when they see it, and unfortunately, they won’t usually tell you if the performance doesn’t meet their expectations; they’ll just leave.

- Scales with concurrency. A high-performance application provides sufficient capacity to handle a reasonable amount of concurrent usage. Handling 1 user is nothing; 5 concurrently is easy; 500 is good; 50,000 is hard.

- Scales with data size. In many applications, network effects mean that as usage grows, so does the amount of “hot” data in play. A high-performance application is designed to perform well with a reasonable amount of hot data. A match-making system with 200 entities is trivial; 20,000 entities is good; 2,000,000 entities is hard.

- Performs modestly complex calculations without requiring complex architecture. A high-performance application (notably, one based on a high-performance platform) will be capable of performing modestly complex algorithmic work on behalf of users without necessarily requiring the construction of a complex system architecture. (Though to be clear, no platform is infinitely performant; so at some point an algorithm’s complexity will require more complex architecture.)
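The latency, throughput, and concurrency attributes above are related to one another. As a minimal sketch, assuming a steady-state system where Little's Law applies (requests in flight = throughput × average latency), the relationship can be worked out as follows. The function names and sample figures are illustrative assumptions, not measurements from any benchmark.

```python
def required_throughput(in_flight: float, avg_latency_s: float) -> float:
    """Requests per second needed to sustain `in_flight` simultaneous
    requests at the given average latency (Little's Law, L = lambda * W)."""
    if avg_latency_s <= 0:
        raise ValueError("avg_latency_s must be positive")
    return in_flight / avg_latency_s


def requests_in_flight(throughput_rps: float, avg_latency_s: float) -> float:
    """Average number of requests inside the system at a given
    throughput and average latency."""
    return throughput_rps * avg_latency_s


if __name__ == "__main__":
    # Hypothetical figures: 500 requests in flight, 100 ms average response.
    print(required_throughput(500, 0.100))
    # At the same throughput, 500 ms responses keep five times as many
    # requests in flight, which is why latency and throughput tend to
    # move together.
    print(requests_in_flight(5000, 0.500))
```

Note that "requests in flight" is not the same as "concurrent users": a user who is reading a page is not occupying the server, so the number of simultaneous requests is usually well below the number of active users.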

What are your application’s performance needs?

It helps to start by determining whether your application needs, or will ever need, to consider performance more than superficially.

We routinely see three situations with respect to application performance needs:

- Applications with known high-performance needs. These are applications that, for example, expect to see large data or complex algorithmic work from day one. Examples would be applications that match users based on non-trivial math, or make recommendations based on some amount of analysis of past and present behavior, or process large documents on the users’ behalf. You should go through each of the attributes in the previous section and consider what your application’s performance characteristics are.

- Applications with known low-performance needs. Some applications are built for the exclusive use of a small number of users with a known volume of data. In these scenarios, the needed capacity can be calculated fairly simply and isn’t expected to change during the application’s usable lifetime. Examples would be intranet or special-purpose B2B applications.

- Applications with as-yet unknown performance needs. We find a lot of people don’t really know how their application will be used or how many people will be using it. Either they haven’t put a lot of thought into performance matters, the complexity of key algorithms isn’t yet known, or the business hasn’t yet explored user interest levels.
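For the known low-performance case, the capacity calculation mentioned above can be quite short. The following is a rough sketch under stated assumptions: the user count, requests per user per hour, and the burst multiplier (`peak_factor`) are all hypothetical inputs you would supply from your own knowledge of the application.

```python
def peak_requests_per_second(users: int,
                             requests_per_user_per_hour: float,
                             peak_factor: float = 3.0) -> float:
    """Rough peak load estimate: the average request rate multiplied
    by a safety factor for bursts. All inputs are assumptions."""
    if users < 0 or requests_per_user_per_hour < 0 or peak_factor <= 0:
        raise ValueError("inputs must be non-negative, peak_factor positive")
    average_rps = users * requests_per_user_per_hour / 3600
    return average_rps * peak_factor


if __name__ == "__main__":
    # Hypothetical intranet application: 200 users, 90 requests each per hour.
    print(peak_requests_per_second(200, 90))
```

When the inputs are genuinely known and stable, a number like this is enough to size the deployment. When they are guesses, the application belongs in the "as-yet unknown" group instead.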

If your application is in the known low-performance tier, the only advantage of high-performance foundation technologies (all else being equal) would be reducing long-term hosting costs. Congratulations, you can stop reading here!

The remainder of this article is for the rest of us: those lucky or unlucky enough to know we need high performance, and those who are uncertain whether performance will be a concern. For us, performance should be on our minds early. Either we know performance is important, or we don’t know yet but want to avoid unnecessary pain if it turns out to be important. In either case, it is in our best interest to spend a modest amount of time and thought planning accordingly.

Sometimes we hear retrospectives suggesting an application had been working acceptably for a few months but eventually bogged down. Therefore, for new projects with unknown performance needs, we often advise selecting technology platforms that do not unnecessarily constrain your optimization efforts if and when performance does become a problem. Give yourself the luxury of scaling by working within your application’s domain rather than by replatforming.

Performance in your technology selection process

Many of us at TechEmpower are interested in performance and how it affects user experience. Personal anecdotes of frustration with slow-responding applications are legion. Meanwhile, Amazon famously claimed 1% fewer sales for each additional 100ms of latency. And retail sites are not alone; all sites will silently lose visitors who are made to wait. Todd Hoff’s comprehensive 2009 blog entry on the costs of latency in web applications is still relevant today.
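To get a feel for what a figure like the one attributed to Amazon would imply, here is a back-of-the-envelope sketch. It assumes the loss scales linearly with added latency, which is our simplifying assumption for illustration and not something the claim itself establishes. The function name and the sample numbers are hypothetical.

```python
def estimated_sales_loss(baseline_sales: float,
                         added_latency_ms: float,
                         loss_per_100ms: float = 0.01) -> float:
    """Estimate lost sales under an assumed linear model:
    `loss_per_100ms` of sales lost per additional 100 ms of latency,
    capped at 100% of the baseline."""
    if baseline_sales < 0 or added_latency_ms < 0:
        raise ValueError("baseline_sales and added_latency_ms must be non-negative")
    fraction_lost = min(1.0, loss_per_100ms * added_latency_ms / 100)
    return baseline_sales * fraction_lost


if __name__ == "__main__":
    # Hypothetical: 1,000,000 in monthly sales and 300 ms of extra latency.
    print(estimated_sales_loss(1_000_000, 300))
```

Your own ratio will differ, so treat the default as a placeholder until you have measurements from your own site.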

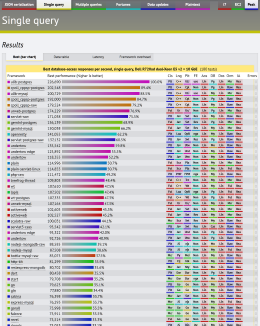

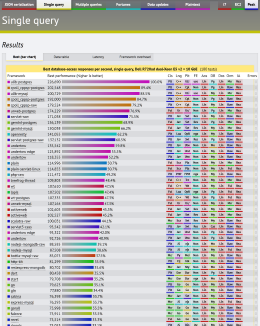

Framework Benchmarks

We created our Web Framework Benchmarks project because we’ve run into situations where well-known frameworks seem to cause significant performance pain for the applications built upon them. The nature of our business sees us working with a broad spectrum of technologies and we wanted to know roughly what to expect, performance-wise, from each.

High-performance and high-scalability technologies are so numerous that understanding the landscape can feel overwhelming. In our Framework Benchmarks project, as of this writing, we have about 300 permutations covering 145 frameworks, and a huge universe of possible permutations we don’t presently cover. With so many noteworthy options, and with the resulting performance data at hand, we feel quite strongly:

Performance should be part of your technology selection process. There are two important elements to this statement: should and part of.

- Performance should be considered because it affects user experience both in responsiveness and in resilience to expected and unexpected load. Early high-performance capacity affords you the luxury of deferring more complicated architectural decisions until later in your project’s lifespan. The data from our Framework Benchmarks project suggests that the pain of early performance problems can be avoided either in whole or in part by making informed framework and platform decisions.

- On the flip side, performance should be just part of your selection process. Performance is important, but it’s definitely not everything. Several other aspects (which we will review in more detail in an upcoming post) may be equally or more important to your selection process. Do not make decisions based exclusively on a technology having “won” a performance comparison such as ours.

“A Good Problem to Have.” Really?

A common refrain is to characterize problems that surface when your business is ramping up as good problems to have because their existence tacitly confirms your success. Though the euphemism is well-meaning, when your team is stressed out coping with “good problem” fires, you should forgive them when they don’t quite share the sentiment.

Unfortunately, if you’re only starting to think about performance after experiencing a problem, the resolution options are fewer because you have already made selections and accrued performance technical debt—call it performance debt if you will. We often hear, “Had I known it would be used this way, I would have selected something higher performance.”

While the industry has a bunch of survivor stories suggesting projects built on low-performance technology can and do succeed, not all teams find themselves with the luxury to re-platform their products to resolve performance problems, as re-platforming is a costly and painful proposition.

We therefore routinely advise leads of new projects to consider performance early. Use the performance characteristics of the languages, libraries, and frameworks you are considering as a filter during the architectural process.

Bandages

Some may have residual doubt because, as the line of thinking goes, when performance problems come up you’ll just put a reverse proxy like Varnish in front of your application and call it a day.

Reverse proxies are terrific for what they do: caching and serving static or semi-static content. If your web application is a blog or a news site, a reverse proxy can act as a bandage to cover a wide set of performance problems inherent in the back-end system. This article is not intended to attack any particular technologies, but an obvious example is WordPress. Reverse proxies and other front-end caching mechanisms are commonplace in WordPress deployments.

However, most web applications are not blogs or news sites. Most applications deal with dynamic information and create personalized responses. And even if your MVP is “basically a news site with a few other bells and whistles,” you should consider where you’re going after MVP. As your functionality grows beyond serving a bunch of static content, using a reverse proxy as a bandage to enable selecting a low-performance platform may end up a regrettable decision.

But to be clear, if you’re building a site where the vast majority of responses can be pre-computed and cached, you can use a reverse proxy paired with a low-performance platform. You can also use a high-performance platform without a reverse proxy. All else being equal, our preference is the architecturally simpler option that avoids adding a reverse proxy as another potential point of failure.
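The trade-off described in this section can be sketched with a simple weighted average. This is an illustrative model only: it assumes every request is either a cache hit served by the proxy or a miss served by the back end, and the latencies and hit ratios below are made-up numbers rather than measurements of Varnish or any platform.

```python
def expected_latency_ms(hit_ratio: float,
                        cache_latency_ms: float,
                        backend_latency_ms: float) -> float:
    """Average response time when a fraction `hit_ratio` of requests is
    served from the proxy cache and the rest reach the back end."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_latency_ms + (1.0 - hit_ratio) * backend_latency_ms


if __name__ == "__main__":
    # Mostly static site (hypothetical): 95% of responses are cacheable.
    print(expected_latency_ms(0.95, 5, 800))
    # After adding personalized features: only 40% are cacheable.
    print(expected_latency_ms(0.40, 5, 800))
```

As the cacheable share falls, the average converges on the back end's own latency. Even at a high hit ratio, every miss still pays the full back-end cost, so the average understates what users of the dynamic features experience.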

It’s reasonable to consider the future

Performance is not the be-all and end-all. However, technology evolution has given modern developers a wide spectrum of high-performance options, many of which can be highly productive as well. It is reasonable, and we believe valuable, to consider performance needs early. Your future self will likely thank you.